Table of Contents

- How to Handle AI Hallucinations in Travel Apps

- How Accurate Does AI for Travel Booking Need to Be?

- How Biased Data Can Break AI in Travel Software

- How to Reduce Vendor Lock‑in When Using AI APIs in Travel

- How to Manage Latency When Using AI for Travel Industry Apps

- What Legal and Compliance Risks AI Creates for the Travel Business

- What to Realistically Expect from AI in Travel

When you go to an AI conference, everyone talks about how great and helpful AI is. In some cases it is; in others, much less so. What no one talks about is how hard it was to integrate AI correctly while avoiding the risks, including the risk of crashing a system that was built over years.

The demo was flawless. The pilot group loved it. And then it went live, and an LLM confidently told a customer that a beachfront property had “private beach access” when it was actually five miles inland. Or a fraud detection model flagged so many legitimate bookings that conversion dropped 15% before anyone noticed.

AI introduces categories of risk that traditional software development doesn’t face. Understanding these risks – and building for them from the start – is the difference between AI features that enhance your product, and AI features that erode customer trust.

Let’s walk through what can actually go wrong.

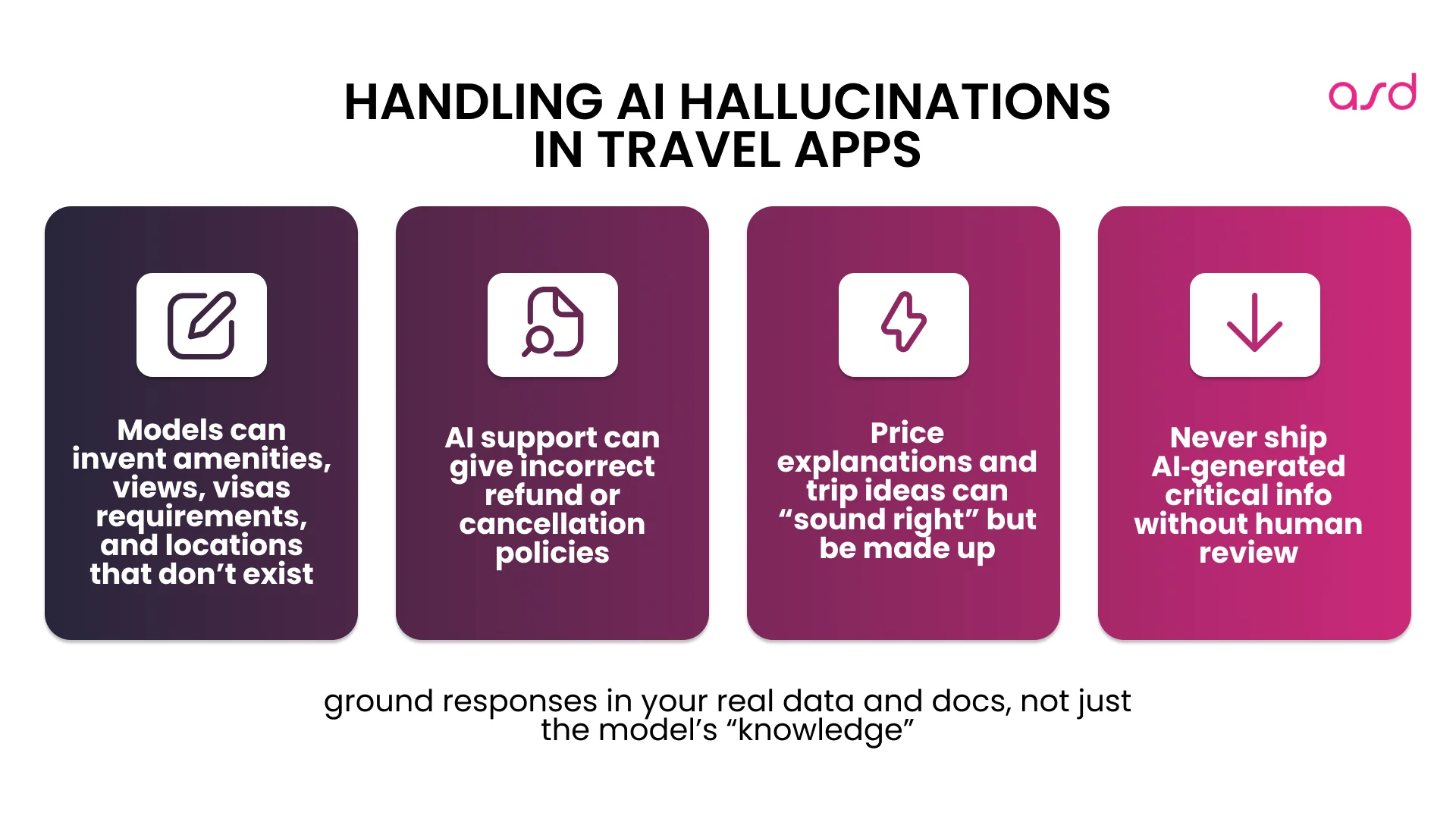

How to Handle AI Hallucinations in Travel Apps

Large language models don’t “know” things the way humans do. They generate plausible-sounding text based on patterns. Usually, those patterns produce accurate content. Sometimes they produce confident nonsense – hallucinations.

In travel software, this creates specific problems:

Property descriptions can invent features. The model might claim amenities that don’t exist, describe views the property doesn’t have, or place hotels in neighborhoods they’re not actually in. If a generated description says “rooftop pool with city views” and there’s no rooftop pool, that’s a legal and reputation problem.

Support responses can provide wrong policy information. An AI-generated answer about cancellation deadlines or refund terms might sound authoritative while being completely incorrect. Customers who act on that information have legitimate grievances.

Pricing explanations can invent logic. Asked why rates differ between dates, an LLM might fabricate explanations rather than admitting it doesn’t know the supplier’s pricing rules. “The rate is higher because of a local festival” sounds reasonable, but if there’s no festival, you’ve just made something up.

Itinerary suggestions can recommend closed venues. In AI trip planner software, models might suggest restaurants that closed last year or attractions with outdated hours. The model has no way to know what’s currently operating unless you explicitly provide that data.

How to Mitigate Hallucinations

Never use AI-generated content for critical information without human review. Anything that affects bookings, cancellations, refunds, or creates contractual obligations needs human oversight.

Implement validation checks comparing AI output against source data. If you’re generating property descriptions, compare the amenities mentioned against your structured amenity data. Flag discrepancies for review.
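As a rough illustration of that validation check, the sketch below scans a generated description for amenity phrases and flags any that are missing from the property's structured record. The `AMENITY_PHRASES` mapping, the amenity keys, and the function name are all hypothetical; a real system would need a much richer phrase list or an entity-extraction step.

```python
import re

# Hypothetical mapping from amenity keys in the structured data
# to phrases a generated description might use for them.
AMENITY_PHRASES = {
    "rooftop_pool": ["rooftop pool"],
    "private_beach": ["private beach"],
    "free_parking": ["free parking", "complimentary parking"],
    "spa": ["spa"],
}


def find_unsupported_claims(description: str, amenities: set[str]) -> list[str]:
    """Return amenity keys the text mentions but the structured record lacks."""
    text = description.lower()
    unsupported = []
    for key, phrases in AMENITY_PHRASES.items():
        mentioned = any(
            re.search(r"\b" + re.escape(phrase) + r"\b", text) for phrase in phrases
        )
        if mentioned and key not in amenities:
            unsupported.append(key)
    return unsupported


generated = "Relax by the rooftop pool with city views, then unwind at the spa."
record = {"spa", "free_parking"}  # what the structured data actually lists
flags = find_unsupported_claims(generated, record)
print(flags)  # ['rooftop_pool']
```

Anything returned by the check would go to a review queue rather than being published automatically.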

Use retrieval-augmented generation (RAG) to ground responses in actual documentation. Rather than letting the model generate from scratch, give it your actual policy documents and ask it to answer based only on that source material.
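A minimal sketch of the grounding step might look like the following. It assumes a tiny in-memory list of policy snippets and uses plain word overlap as a stand-in for real vector search; the snippet text, function names, and prompt wording are illustrative, and the actual model call is left out.

```python
import re

# Hypothetical policy snippets; in practice these come from your own documents.
POLICY_SNIPPETS = [
    "Free cancellation is available up to 48 hours before check-in.",
    "Refunds are issued to the original payment method within 10 business days.",
    "Pets are allowed in designated rooms for an additional fee.",
]


def tokenize(text: str) -> set[str]:
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question: str, snippets: list[str], top_k: int = 2) -> list[str]:
    """Rank snippets by word overlap with the question (stand-in for vector search)."""
    question_words = tokenize(question)
    ranked = sorted(
        snippets, key=lambda s: len(question_words & tokenize(s)), reverse=True
    )
    return ranked[:top_k]


def build_grounded_prompt(question: str, snippets: list[str]) -> str:
    """Assemble a prompt that restricts the model to the retrieved excerpts."""
    context = "\n".join(f"- {s}" for s in retrieve(question, snippets))
    return (
        "Answer using ONLY the policy excerpts below. "
        "If the answer is not in them, say you don't know.\n\n"
        f"Policy excerpts:\n{context}\n\nQuestion: {question}"
    )


prompt = build_grounded_prompt("When can I get free cancellation?", POLICY_SNIPPETS)
print(prompt)
```

The resulting prompt is what you would send to whichever model you use; grounding narrows the room for invention but does not remove the need for the validation and review steps described here.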

Add disclaimers on AI-generated content where appropriate. A simple “AI-generated suggestion” label sets expectations and tells customers how much weight to give the content.

The goal isn’t to eliminate hallucinations – current technology can’t do that. The goal is to catch them before customers see them.

How Accurate Does AI for Travel Booking Need to Be?

Machine learning models make mistakes. Even a model with 90% accuracy is wrong 10% of the time. At scale, that 10% becomes thousands of errors.

Consider fraud detection in an AI-powered travel booking system. A model with 95% accuracy sounds impressive – until you realize that with 100,000 transactions per month, you’re making 5,000 wrong calls. If half of those are false positives, you’re annoying 2,500 customers every month.
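The arithmetic above is easy to rerun with your own volumes. The helper below is a small sketch (the function name and the false-positive share parameter are illustrative) that reproduces the numbers from the example.

```python
def monthly_errors(transactions: int, accuracy: float, false_positive_share: float):
    """Return (wrong calls, false positives, false negatives) per month."""
    wrong = round(transactions * (1 - accuracy))
    false_positives = round(wrong * false_positive_share)
    return wrong, false_positives, wrong - false_positives


wrong, false_positives, false_negatives = monthly_errors(100_000, 0.95, 0.5)
print(wrong, false_positives, false_negatives)  # 5000 2500 2500
```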

Search ranking mistakes mean missed bookings. If your AI occasionally buries the perfect property on page 3, users never see it, and you lose the booking.

Recommendation errors create poor experiences. Recommend poorly-matched properties often enough, and users stop trusting your suggestions.

The same math applies to classification, recommendations, and predictions. Real-world accuracy is often 80-90% for complex tasks, and you need to design for the 10-20% that’s wrong.

How to Mitigate Accuracy Issues

Set realistic accuracy expectations from the start. When stakeholders hear “90% accurate,” they often hear “basically perfect.” Make the failure cases concrete.

Implement confidence thresholds so you only act on high-confidence predictions. For example, let the system approve or block a booking automatically only when the model is at least 95% confident in its call, and send middle-range scores to human review.

Build human review processes for edge cases. If AI handles 70% with high confidence and routes 30% to humans, that’s still a massive efficiency gain.

Design graceful degradation. If you’re not confident, fall back to non-AI behavior rather than guessing. Being conservative preserves trust.
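Taken together, thresholds, human review, and graceful degradation amount to a small routing function. The sketch below assumes the model returns a fraud probability between 0 and 1, or `None` when no score is available; the threshold value and the route names are illustrative and should be tuned against your own error costs.

```python
from typing import Optional

# Illustrative threshold; tune it against your own error costs.
AUTO_THRESHOLD = 0.95


def route_booking(fraud_probability: Optional[float]) -> str:
    """Decide what to do with a booking given the model's fraud probability."""
    if fraud_probability is None:
        # No score (model down, timeout): fall back to non-AI behavior.
        return "rule_based_checks"
    if fraud_probability >= AUTO_THRESHOLD:
        return "auto_decline"
    if fraud_probability <= 1 - AUTO_THRESHOLD:
        return "auto_approve"
    # Middle-range scores are where the model is least reliable.
    return "human_review"


for score in (0.02, 0.50, 0.99, None):
    print(score, route_booking(score))
```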

The teams that ship successful AI features are the ones who build for the failure modes, not just the happy path.

How Biased Data Can Break AI in Travel Software

AI models learn from your data. If your data has problems, your AI inherits them – and sometimes amplifies them.

Training Data Biases

If your historical bookings over-represent business travelers, your recommendation system will under-serve families. Geographic bias means weak international recommendations if most users book domestically. Temporal bias affects seasonal accuracy when training data skews toward certain seasons.

Data Drift

Customer behavior changes over time. A model trained on 2024 patterns may not perform well on 2026 patterns. Seasonal shifts, market changes, and external events can make trained models obsolete quickly.

Even without dramatic shifts, gradual drift is inevitable. User preferences evolve, new competitors emerge, and supplier strategies shift.

Incomplete Data

Training fraud detection on data that doesn’t include recent attack patterns means the model can’t detect new fraud vectors. Fraudsters adapt. A model trained on last year’s attack patterns won’t recognize this year’s techniques.

Training recommendations without sufficient data from certain user segments means poor recommendations for those users. If you have limited data from budget travelers or luxury seekers, your model won’t serve them well.

How to Mitigate Data Quality Issues

Invest in data quality before building AI features – it’s genuinely that important. Clean data is the foundation everything else builds on. No amount of clever modeling fixes fundamentally flawed training data.

Audit training data for bias and completeness. Before training, examine your data distribution. What segments are overrepresented? Which are missing? Are all regions, seasons, and booking patterns adequately represented? Document gaps and make conscious decisions about how to address them.

Monitor for data drift with automated retraining triggers. Set up monitoring that compares current data distribution to the training data distribution. When they diverge significantly, trigger a retrain. For many travel applications, quarterly retraining is a reasonable starting point.
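One common way to compare two distributions is the Population Stability Index (PSI). The sketch below is an assumption about how such a check could look, not a prescribed method: the bucket shares are made up, and the 0.25 retraining threshold is a widely quoted rule of thumb that you should validate on your own data.

```python
import math


def population_stability_index(expected: list[float], actual: list[float]) -> float:
    """PSI between two distributions given as per-bucket shares that sum to 1."""
    eps = 1e-6  # avoids log(0) when a bucket is empty
    psi = 0.0
    for e, a in zip(expected, actual):
        e, a = max(e, eps), max(a, eps)
        psi += (a - e) * math.log(a / e)
    return psi


# Hypothetical share of bookings per lead-time bucket (short, medium, long).
training = [0.40, 0.35, 0.25]
current = [0.15, 0.35, 0.50]

psi = population_stability_index(training, current)
needs_retrain = psi > 0.25  # rule-of-thumb threshold, treat as an assumption
print(round(psi, 3), needs_retrain)
```

Running a check like this per feature on a schedule gives you the automated trigger; the fixed retraining cadence then acts as a backstop.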

Ensure training data covers edge cases and underrepresented scenarios. You may need to deliberately oversample rare-but-important cases. Fraud examples, cancellations, and unusual booking patterns should be represented even if they’re statistically rare.

How to Reduce Vendor Lock‑in When Using AI APIs in Travel

Most travel startups (sensibly) use AI APIs from OpenAI, Anthropic, or similar providers rather than building infrastructure from scratch. This creates dependencies you need to plan for.

Service Reliability

When OpenAI has an outage, your AI features stop working. If AI is in your critical path, your platform stops working. Even without full outages, API latency spikes are common. Your 500ms AI feature might occasionally take 5 seconds when the provider is under load.

Pricing Changes

AI providers may increase prices or change rate limits with limited notice. If your unit economics assume current API pricing, budget for potential increases. Some teams have seen API costs double with model updates or pricing changes.

API Changes and Deprecations

Providers deprecate models and change behavior. The model you built on might be sunset in 18 months. API contracts aren’t guaranteed stable – response formats change, parameters shift, and rate limits adjust.

Data Privacy Implications

Sending customer data to third-party AI APIs has privacy implications. What happens to your data? Where is it processed? Can it be used for training? For generative AI in travel tech, these questions are critical.

GDPR requires knowing where data is processed and having data processing agreements. Some providers don’t train on API data; others don’t offer those guarantees. Read the terms carefully.

How to Mitigate Vendor Lock-in

Build fallback behavior for every AI-dependent feature. If the AI API is down, what happens? Can you fall back to templates or queue the request?

Build abstraction layers that let you swap providers. Don’t couple your application directly to one provider’s API. Switching providers should mean changing one adapter, not rewriting your application.
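A minimal sketch of such an abstraction layer, combined with fallback behavior, is shown below. All class and function names are hypothetical; `FlakyAIProvider` stands in for a real vendor adapter and simply simulates an outage, so no real provider SDK is called.

```python
from typing import Protocol


class DescriptionProvider(Protocol):
    def describe(self, property_name: str, amenities: list[str]) -> str: ...


class TemplateProvider:
    """Non-AI fallback: builds a description from structured data only."""

    def describe(self, property_name: str, amenities: list[str]) -> str:
        return f"{property_name} offers: {', '.join(amenities)}."


class FlakyAIProvider:
    """Stand-in for a vendor adapter; always fails to simulate an outage."""

    def describe(self, property_name: str, amenities: list[str]) -> str:
        raise ConnectionError("provider unavailable")


def describe_with_fallback(
    providers: list[DescriptionProvider], property_name: str, amenities: list[str]
) -> str:
    """Try each provider in order and return the first successful result."""
    last_error = None
    for provider in providers:
        try:
            return provider.describe(property_name, amenities)
        except Exception as exc:  # in production, catch narrower error types
            last_error = exc
    raise RuntimeError("all providers failed") from last_error


text = describe_with_fallback(
    [FlakyAIProvider(), TemplateProvider()], "Harbor Inn", ["spa", "free parking"]
)
print(text)  # Harbor Inn offers: spa, free parking.
```

Because the application only depends on the `describe` interface, switching vendors means writing one new adapter class and changing the provider list.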

Monitor API costs closely and set budget alerts. Set up cost tracking per feature and alerts when usage exceeds projections.
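Per-feature cost tracking can start very simply. The sketch below assumes token-based pricing and uses placeholder prices and budgets, not any provider's real rates; the class and feature names are hypothetical.

```python
from collections import defaultdict


class CostTracker:
    """Accumulates API spend per feature and reports features near their budget."""

    def __init__(self, monthly_budgets: dict[str, float]):
        self.budgets = monthly_budgets
        self.spend = defaultdict(float)

    def record(self, feature: str, input_tokens: int, output_tokens: int,
               price_in_per_1k: float, price_out_per_1k: float) -> float:
        cost = (input_tokens / 1000) * price_in_per_1k
        cost += (output_tokens / 1000) * price_out_per_1k
        self.spend[feature] += cost
        return cost

    def over_budget(self, alert_ratio: float = 0.8) -> list[str]:
        """Features whose spend has reached alert_ratio of the monthly budget."""
        return [
            feature for feature, budget in self.budgets.items()
            if self.spend[feature] >= alert_ratio * budget
        ]


tracker = CostTracker({"descriptions": 100.0, "support_chat": 50.0})
# Placeholder prices per 1,000 tokens; substitute your provider's actual rates.
tracker.record("support_chat", 3_000_000, 500_000, 0.01, 0.03)
tracker.record("descriptions", 200_000, 100_000, 0.01, 0.03)
alerts = tracker.over_budget()
print(alerts)  # ['support_chat']
```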

Get data processing agreements in writing. Ensure you have contracts specifying how data is used and that it won’t be used for training. For travel and hospitality platforms, this is non-negotiable.

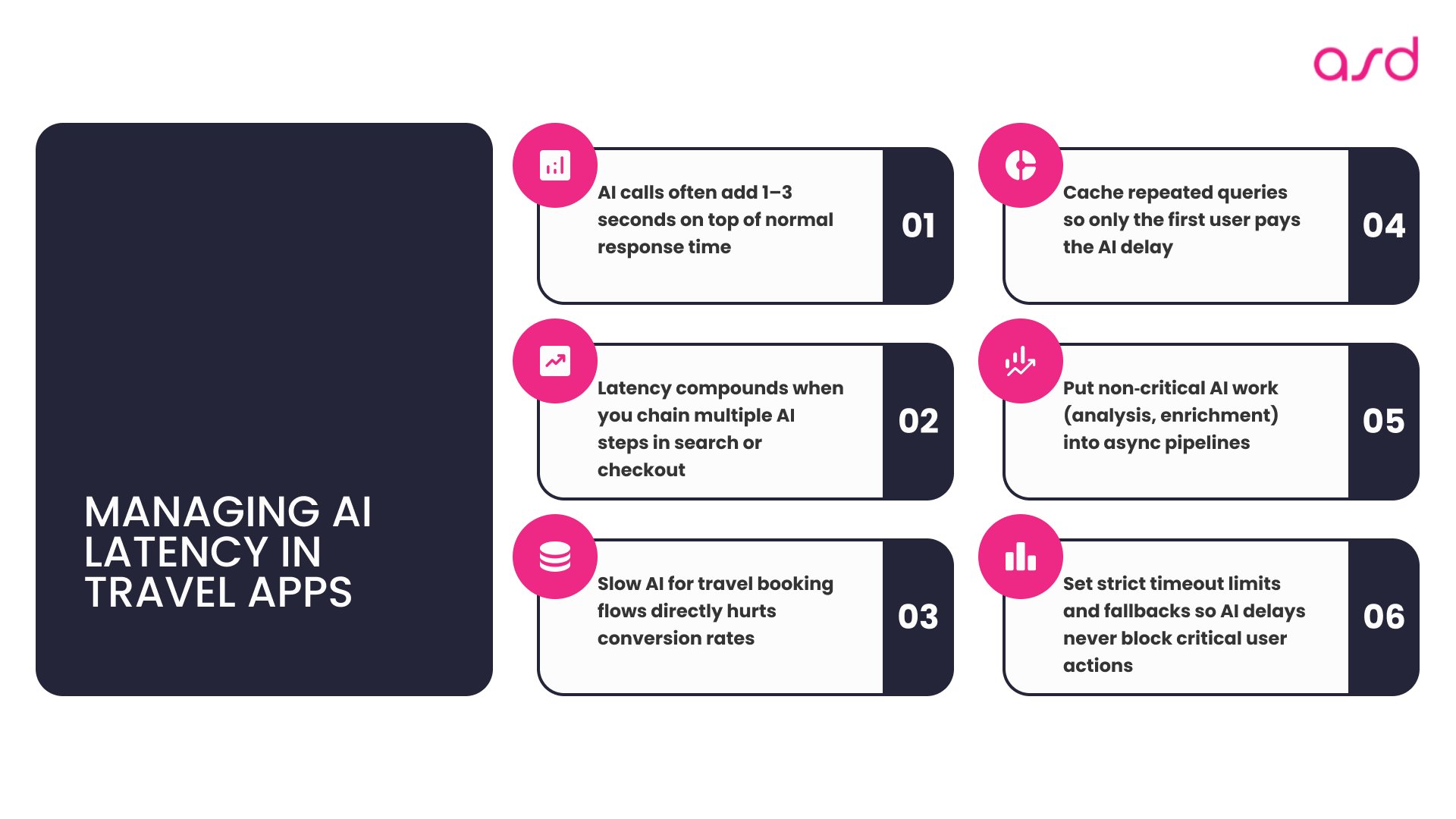

How to Manage Latency When Using AI for Travel Industry Apps

AI processing takes time. Local model inference might be 50-100ms. External API calls typically add 1-3 seconds. These delays compound and can significantly impact conversion rates.

How to Mitigate Latency Issues

Benchmark AI processing times in production conditions. Load test with concurrent requests and realistic data volumes.

Implement aggressive caching for repeated queries. Your first user pays the latency tax; the next 100 get instant results.
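As a sketch of that idea, the in-memory cache below stores each result with a timestamp and recomputes only after a time-to-live expires. The class name, cache key, and TTL are illustrative; a production setup would more likely use a shared cache so all application instances benefit.

```python
import time


class TTLCache:
    """Tiny in-memory cache whose entries expire after ttl_seconds."""

    def __init__(self, ttl_seconds: float):
        self.ttl = ttl_seconds
        self._store = {}

    def get_or_compute(self, key, compute):
        now = time.monotonic()
        hit = self._store.get(key)
        if hit is not None and now - hit[0] < self.ttl:
            return hit[1]  # fresh entry: skip the slow call
        value = compute()
        self._store[key] = (now, value)
        return value


calls = []  # records how often the slow function actually runs


def slow_ai_summary() -> str:
    calls.append(1)  # stands in for a multi-second AI API call
    return "Guests praise the location and breakfast."


cache = TTLCache(ttl_seconds=3600)
for _ in range(3):
    summary = cache.get_or_compute("reviews:hotel-123", slow_ai_summary)

print(len(calls))  # 1
```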

Use async processing wherever users don’t need immediate results. Generate enhanced descriptions offline. Analyze bookings after completion.

Set timeout limits preventing AI delays from blocking critical flows. If reranking doesn’t respond in 300ms, fall back to default sorting.
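One way to enforce such a limit is to run the AI call in a worker thread and stop waiting once the budget is spent. The sketch below uses the standard library's `concurrent.futures`; the function names are hypothetical and `slow_rerank` merely simulates a slow model.

```python
import time
from concurrent.futures import ThreadPoolExecutor, TimeoutError as FutureTimeout

_executor = ThreadPoolExecutor(max_workers=4)


def rerank_with_timeout(results, rerank, timeout_s=0.3):
    """Return reranked results, or the original order if reranking is too slow."""
    future = _executor.submit(rerank, results)
    try:
        return future.result(timeout=timeout_s)
    except FutureTimeout:
        # cancel() only helps if the call has not started; a running call
        # keeps going in the background and its result is discarded.
        future.cancel()
        return results
    except Exception:
        return results  # a reranker error also falls back to default sorting


def slow_rerank(results):
    time.sleep(0.5)  # simulates a model that misses the latency budget
    return sorted(results)


default_order = [3, 1, 2]
print(rerank_with_timeout(default_order, slow_rerank, timeout_s=0.1))  # [3, 1, 2]
print(rerank_with_timeout(default_order, sorted))  # [1, 2, 3]
```

Note that the timeout protects the user's request, not the provider bill: the abandoned call may still complete and be charged.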

Consider cheaper, faster models for less critical use cases. Save expensive models for where quality really matters.

What Legal and Compliance Risks AI Creates for the Travel Business

AI regulation is evolving fast. What’s a gray area today may be a compliance requirement tomorrow.

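GDPR and Automated Decision-Making: If AI makes decisions that significantly affect customers (like denying a booking), GDPR’s rules on solely automated decisions (Article 22) apply. Customers can request human intervention and contest the decision, and you should be prepared to explain how it was reached.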

Bias and Discrimination: AI models can perpetuate or amplify biases. If your fraud detection flags a disproportionate number of bookings from certain regions or demographics, you could face discrimination claims.

Liability for AI-Generated Errors: If AI-generated property descriptions contain false claims, who’s liable? Most AI provider terms explicitly disclaim liability, which means it falls on you.

How to Mitigate Legal Risks

Get legal review of AI features before launch. Have lawyers review generated content, automated decisions, and data usage.

Implement audit trails for AI decisions. Log inputs, outputs, confidence scores, and decision factors so you can explain what happened.
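A sketch of what one audit entry could contain is shown below, written as a JSON line for an append-only log. The field names, the `fraud-v3` version string, and the sample booking are hypothetical.

```python
import json
import uuid
from datetime import datetime, timezone


def build_audit_record(model_version: str, inputs: dict, output: str,
                       confidence: float, factors: list[str]) -> str:
    """Serialize one AI decision as a JSON line for an append-only log."""
    record = {
        "decision_id": str(uuid.uuid4()),
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "model_version": model_version,
        "inputs": inputs,
        "output": output,
        "confidence": confidence,
        "decision_factors": factors,
    }
    return json.dumps(record, sort_keys=True)


line = build_audit_record(
    model_version="fraud-v3",  # hypothetical identifier
    inputs={"booking_id": "B-1001", "amount": 420.0, "country": "PT"},
    output="human_review",
    confidence=0.62,
    factors=["new_account", "high_amount"],
)
print(line)
```

Logged inputs may contain personal data, so the log itself needs the same access and retention controls as the rest of your customer data.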

Include clear terms explaining AI usage to customers. Transparency helps set expectations and may provide some legal protection.

Monitor for bias in model outputs. Regularly audit model decisions across different demographics and user segments.
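A simple starting point for such an audit is to compare flag rates across segments. The sketch below uses made-up counts and hypothetical segment names; a large gap is a prompt for investigation, not proof of discrimination, since legitimate risk factors can differ between segments.

```python
from collections import Counter


def flag_rates(decisions):
    """Share of flagged bookings per segment from (segment, was_flagged) pairs."""
    totals, flagged = Counter(), Counter()
    for segment, was_flagged in decisions:
        totals[segment] += 1
        flagged[segment] += int(was_flagged)
    return {segment: flagged[segment] / totals[segment] for segment in totals}


def disparity_ratio(rates):
    """Highest flag rate divided by the lowest; 1.0 means identical rates."""
    lowest, highest = min(rates.values()), max(rates.values())
    return float("inf") if lowest == 0 else highest / lowest


# Made-up audit sample: 100 decisions per region.
sample = (
    [("region_a", True)] * 4 + [("region_a", False)] * 96
    + [("region_b", True)] * 16 + [("region_b", False)] * 84
)
rates = flag_rates(sample)
ratio = disparity_ratio(rates)
print(rates, round(ratio, 2))
```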

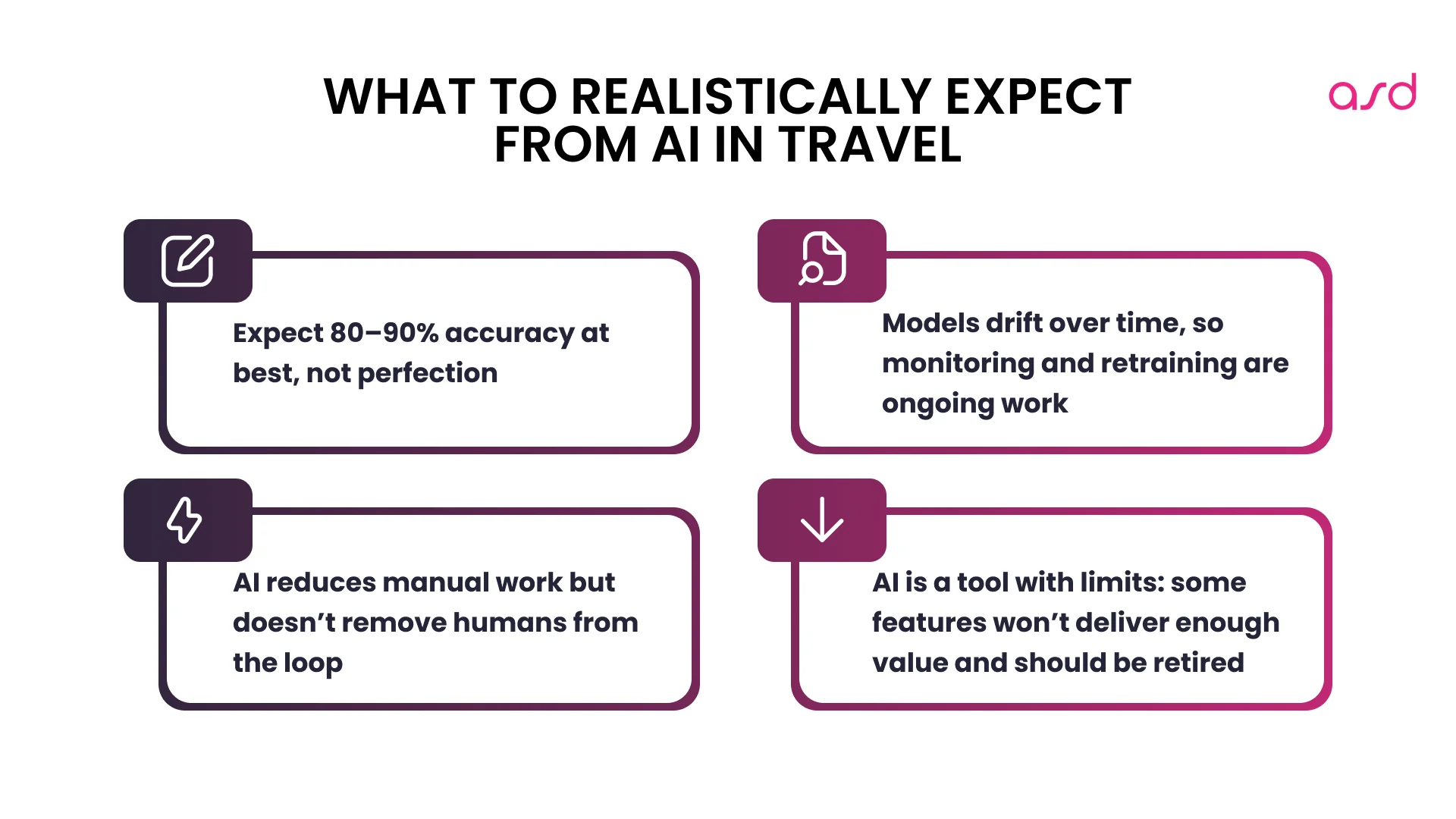

What to Realistically Expect from AI in Travel

AI delivers real value in travel software, and there are genuine competitive advantages to be gained. But it’s not magic. Here’s what to actually expect:

Expect 80-90% accuracy, not 100%. Even the best models make mistakes. Design for graceful handling of errors.

Plan for ongoing maintenance and monitoring. Models drift and patterns change. Budget engineering time for monitoring and improvement.

Budget for human oversight. AI reduces manual work; it doesn’t eliminate it.

Assume you’ll need to iterate after launch. Your first version won’t be perfect.

Accept that some use cases won’t work as hoped. Have a plan to deprecate gracefully when features don’t deliver value.

The teams that build lasting AI advantages in the travel industry are the ones who go in with clear eyes about limitations. They build for failure modes, invest in monitoring, and treat AI as a tool rather than a solution.

Questions? Answers!

How do I know if my AI accuracy is good enough for production?

Start by defining what “good enough” means for your specific use case. For fraud detection, you might need 95%+ precision (few false positives) even if recall is lower. For recommendations, 75% accuracy might be fine since users browse multiple options. The key is understanding the cost of different error types. A false positive in fraud detection annoys a customer; a false negative costs you money. Calculate the expected cost of errors at your scale and compare against the value AI provides. If your 85% accurate model processes 10,000 requests monthly and gets 1,500 wrong, what’s the impact of those errors versus the benefit of automating the 8,500 correct ones?
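The closing question can be turned into a quick calculation. In the sketch below, the value of a correct automated request and the cost of an error are placeholder figures you would replace with your own estimates; only the volume and accuracy come from the example above.

```python
def net_monthly_value(requests: int, accuracy: float,
                      value_per_correct: float, cost_per_error: float):
    """Return (correct, wrong, net value) for one month of automated requests."""
    correct = round(requests * accuracy)
    wrong = requests - correct
    return correct, wrong, correct * value_per_correct - wrong * cost_per_error


# Placeholder economics: each correct answer saves 0.50, each error costs 2.00.
correct, wrong, net = net_monthly_value(10_000, 0.85, 0.50, 2.00)
print(correct, wrong, net)  # 8500 1500 1250.0
```

If the net figure turns negative under realistic error costs, the model is not ready for that use case regardless of how the accuracy number sounds.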

What’s the best way to detect when my AI model starts degrading?

Build monitoring dashboards that track key metrics over time: accuracy/precision/recall on held-out test data, prediction confidence distributions, error rates by category, and latency. Set up automated alerts when metrics drop below thresholds. More importantly, track business metrics: conversion rates on AI-ranked results, customer satisfaction scores on AI-generated content, support ticket volume for AI-assisted features. Models often degrade gradually, so look for trends, not just absolute thresholds. Retraining on a fixed schedule (quarterly is a reasonable starting point) works for many travel applications, but set up monitoring that would catch issues between retraining cycles.

Should I build my own models or use external AI APIs?

For most travel platforms, start with external APIs (OpenAI, Anthropic, Google, AWS). They handle infrastructure, updates, and scaling. Only consider building your own when: (1) you have extremely high volume where API costs exceed self-hosting costs, (2) you need sub-100ms latency that external APIs can’t provide, (3) you have proprietary data that you legally can’t send to third parties, or (4) your use case is so specific that general-purpose models don’t work well. Building your own means hiring ML engineers, managing infrastructure, and handling model updates – only worth it at significant scale or for core differentiating features.

How do I handle AI hallucinations in customer-facing features?

Layer multiple validation strategies: (1) Use RAG to ground responses in your actual data rather than letting models generate freely, (2) implement automated validation that cross-checks generated content against source data, (3) add confidence thresholds and only show high-confidence outputs automatically, (4) build human review queues for medium-confidence content, (5) add appropriate disclaimers (“AI-generated suggestion” for recommendations), and (6) make it easy for customers to report issues. Most importantly, never use AI for contractual information (policies, pricing, terms) without human verification. The cost of a single hallucination that leads to a customer dispute usually exceeds the cost of human review for thousands of items.

What legal protections do I need before deploying AI features?

Consult with lawyers familiar with both AI and your jurisdiction, but generally you need: (1) updated terms of service explaining AI usage and limitations, (2) data processing agreements with AI providers specifying how customer data is handled, (3) audit trails for automated decisions that affect customers (GDPR requirement in EU), (4) processes for human review of automated decisions when customers request it, (5) bias monitoring and mitigation strategies, especially for decisions around pricing, availability, or fraud, and (6) clear disclaimers on AI-generated content. For travel platforms, pay special attention to false advertising laws – AI-generated property descriptions that invent amenities create liability. Insurance policies may not cover AI-related claims, so review your coverage and consider AI-specific riders.